NOTE: Apart from

You can see how this translation was done in this article.

Friday, 02 August 2024

//11 minute read

Markdown is a lightweight markup language that you can use to add formatting elements to plaintext text documents. Created by John Gruber in 2004, Markdown is now one of the world’s most popular markup languages.

On this site I use a super simple approach to blogging, having tried and failed to maintain a blog in the past I wanted to make it as easy as possible to write and publish posts. I use markdown to write my posts and this site has a single service using Markdig to convert the markdown to HTML.

In a word simplicity. This isn't going to be a super high traffic site, I use ASP.NET OutPutCache to cache the pages and I'm not going to be updating it that often. I wanted to keep the site as simple as possible and not have to worry about the overhead of a static site generator both in terms of the build process and the complexity of the site.

To clarify; static site generators like Hugo / Jekyll etc...can be a good solution for many sites but for this one I wanted to keep it as simple for me as possible. I'm a 25 year ASP.NET veteran so understand it inside and out. This site design does add complexity; I have views, services, controllers and a LOT of manual HTML & CSS but I'm comfortable with that.

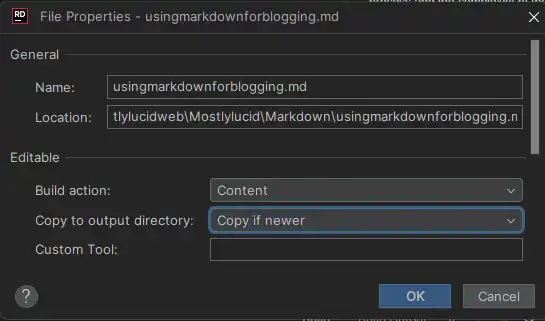

I simply drop a new .md file into the Markdown folder and the site picks it up and renders it (when I remember to aet it as content, this ensures it's avaiable in the output files! )

Then when I checkin the site to GitHub the Action runs and the site is updated. Simple!

Since I just added the image here, I'll show you how I did it. I simply added the image to the wwwroot/articleimages folder and referenced it in the markdown file like this:

I then add an extension to my Markdig pipeline which rewrites these to the correct URL (all about simplicity). See here for the source code for the extension.

using Markdig;

using Markdig.Renderers;

using Markdig.Syntax;

using Markdig.Syntax.Inlines;

namespace Mostlylucid.MarkDigExtensions;

public class ImgExtension : IMarkdownExtension

{

public void Setup(MarkdownPipelineBuilder pipeline)

{

pipeline.DocumentProcessed += ChangeImgPath;

}

public void Setup(MarkdownPipeline pipeline, IMarkdownRenderer renderer)

{

}

public void ChangeImgPath(MarkdownDocument document)

{

foreach (var link in document.Descendants<LinkInline>())

if (link.IsImage)

link.Url = "/articleimages/" + link.Url;

}

}

The BlogService is a simple service that reads the markdown files from the Markdown folder and converts them to HTML using Markdig.

The full source for this is below and here.

using System.Globalization;

using System.Text.RegularExpressions;

using Markdig;

using Microsoft.Extensions.Caching.Memory;

using Mostlylucid.MarkDigExtensions;

using Mostlylucid.Models.Blog;

namespace Mostlylucid.Services;

public class BlogService

{

private const string Path = "Markdown";

private const string CacheKey = "Categories";

private static readonly Regex DateRegex = new(

@"<datetime class=""hidden"">(\d{4}-\d{2}-\d{2}T\d{2}:\d{2})</datetime>",

RegexOptions.Compiled | RegexOptions.IgnoreCase | RegexOptions.NonBacktracking);

private static readonly Regex WordCoountRegex = new(@"\b\w+\b",

RegexOptions.Compiled | RegexOptions.Multiline | RegexOptions.IgnoreCase | RegexOptions.NonBacktracking);

private static readonly Regex CategoryRegex = new(@"<!--\s*category\s*--\s*([^,]+?)\s*(?:,\s*([^,]+?)\s*)?-->",

RegexOptions.Compiled | RegexOptions.Singleline);

private readonly ILogger<BlogService> _logger;

private readonly IMemoryCache _memoryCache;

private readonly MarkdownPipeline pipeline;

public BlogService(IMemoryCache memoryCache, ILogger<BlogService> logger)

{

_logger = logger;

_memoryCache = memoryCache;

pipeline = new MarkdownPipelineBuilder().UseAdvancedExtensions().Use<ImgExtension>().Build();

ListCategories();

}

private Dictionary<string, List<string>> GetFromCache()

{

return _memoryCache.Get<Dictionary<string, List<string>>>(CacheKey) ?? new Dictionary<string, List<string>>();

}

private void SetCache(Dictionary<string, List<string>> categories)

{

_memoryCache.Set(CacheKey, categories, new MemoryCacheEntryOptions

{

AbsoluteExpirationRelativeToNow = TimeSpan.FromHours(12)

});

}

private void ListCategories()

{

var cacheCats = GetFromCache();

var pages = Directory.GetFiles("Markdown", "*.md");

var count = 0;

foreach (var page in pages)

{

var pageAlreadyAdded = cacheCats.Values.Any(x => x.Contains(page));

if (pageAlreadyAdded) continue;

var text = File.ReadAllText(page);

var categories = GetCategories(text);

if (!categories.Any()) continue;

count++;

foreach (var category in categories)

if (cacheCats.TryGetValue(category, out var pagesList))

{

pagesList.Add(page);

cacheCats[category] = pagesList;

_logger.LogInformation("Added category {Category} for {Page}", category, page);

}

else

{

cacheCats.Add(category, new List<string> { page });

_logger.LogInformation("Created category {Category} for {Page}", category, page);

}

}

if (count > 0) SetCache(cacheCats);

}

public List<string> GetCategories()

{

var cacheCats = GetFromCache();

return cacheCats.Keys.ToList();

}

public List<PostListModel> GetPostsByCategory(string category)

{

var pages = GetFromCache()[category];

return GetPosts(pages.ToArray());

}

public BlogPostViewModel? GetPost(string postName)

{

try

{

var path = System.IO.Path.Combine(Path, postName + ".md");

var page = GetPage(path, true);

return new BlogPostViewModel

{

Categories = page.categories, WordCount = WordCount(page.restOfTheLines), Content = page.processed,

PublishedDate = page.publishDate, Slug = page.slug, Title = page.title

};

}

catch (Exception e)

{

_logger.LogError(e, "Error getting post {PostName}", postName);

return null;

}

}

private int WordCount(string text)

{

return WordCoountRegex.Matches(text).Count;

}

private string GetSlug(string fileName)

{

var slug = System.IO.Path.GetFileNameWithoutExtension(fileName);

return slug.ToLowerInvariant();

}

private static string[] GetCategories(string markdownText)

{

var matches = CategoryRegex.Matches(markdownText);

var categories = matches

.SelectMany(match => match.Groups.Cast<Group>()

.Skip(1) // Skip the entire match group

.Where(group => group.Success) // Ensure the group matched

.Select(group => group.Value.Trim()))

.ToArray();

return categories;

}

public (string title, string slug, DateTime publishDate, string processed, string[] categories, string

restOfTheLines) GetPage(string page, bool html)

{

var fileInfo = new FileInfo(page);

// Ensure the file exists

if (!fileInfo.Exists) throw new FileNotFoundException("The specified file does not exist.", page);

// Read all lines from the file

var lines = File.ReadAllLines(page);

// Get the title from the first line

var title = lines.Length > 0 ? Markdown.ToPlainText(lines[0].Trim()) : string.Empty;

// Concatenate the rest of the lines with newline characters

var restOfTheLines = string.Join(Environment.NewLine, lines.Skip(1));

// Extract categories from the text

var categories = GetCategories(restOfTheLines);

var publishedDate = fileInfo.CreationTime;

var publishDate = DateRegex.Match(restOfTheLines).Groups[1].Value;

if (!string.IsNullOrWhiteSpace(publishDate))

publishedDate = DateTime.ParseExact(publishDate, "yyyy-MM-ddTHH:mm", CultureInfo.InvariantCulture);

// Remove category tags from the text

restOfTheLines = CategoryRegex.Replace(restOfTheLines, "");

restOfTheLines = DateRegex.Replace(restOfTheLines, "");

// Process the rest of the lines as either HTML or plain text

var processed =

html ? Markdown.ToHtml(restOfTheLines, pipeline) : Markdown.ToPlainText(restOfTheLines, pipeline);

// Generate the slug from the page filename

var slug = GetSlug(page);

// Return the parsed and processed content

return (title, slug, publishedDate, processed, categories, restOfTheLines);

}

public List<PostListModel> GetPosts(string[] pages)

{

List<PostListModel> pageModels = new();

foreach (var page in pages)

{

var pageInfo = GetPage(page, false);

var summary = Markdown.ToPlainText(pageInfo.restOfTheLines).Substring(0, 100) + "...";

pageModels.Add(new PostListModel

{

Categories = pageInfo.categories, Title = pageInfo.title,

Slug = pageInfo.slug, WordCount = WordCount(pageInfo.restOfTheLines),

PublishedDate = pageInfo.publishDate, Summary = summary

});

}

pageModels = pageModels.OrderByDescending(x => x.PublishedDate).ToList();

return pageModels;

}

public List<PostListModel> GetPostsForFiles()

{

var pages = Directory.GetFiles("Markdown", "*.md");

return GetPosts(pages);

}

}

As you can see this has a few elements:

The code to process the markdown files to HTML is pretty simple, I use the Markdig library to convert the markdown to HTML and then I use a few regular expressions to extract the categories and the published date from the markdown file.

The GetPage method is used to extract the content of the markdown file, it has a few steps:

var lines = File.ReadAllLines(page);

// Get the title from the first line

var title = lines.Length > 0 ? Markdown.ToPlainText(lines[0].Trim()) : string.Empty;

As the title is prefixed with "#" I use the Markdown.ToPlainText method to strip the "#" from the title.

// Concatenate the rest of the lines with newline characters

var restOfTheLines = string.Join(Environment.NewLine, lines.Skip(1));

// Extract categories from the text

var categories = GetCategories(restOfTheLines);

// Remove category tags from the text

restOfTheLines = CategoryRegex.Replace(restOfTheLines, "");

The GetCategories method uses a regular expression to extract the categories from the markdown file.

private static readonly Regex CategoryRegex = new(@"<!--\s*category\s*--\s*([^,]+?)\s*(?:,\s*([^,]+?)\s*)?-->",

RegexOptions.Compiled | RegexOptions.Singleline);

private static string[] GetCategories(string markdownText)

{

var matches = CategoryRegex.Matches(markdownText);

var categories = matches

.SelectMany(match => match.Groups.Cast<Group>()

.Skip(1) // Skip the entire match group

.Where(group => group.Success) // Ensure the group matched

.Select(group => group.Value.Trim()))

.ToArray();

return categories;

}

private static readonly Regex DateRegex = new(

@"<datetime class=""hidden"">(\d{4}-\d{2}-\d{2}T\d{2}:\d{2})</datetime>",

RegexOptions.Compiled | RegexOptions.IgnoreCase | RegexOptions.NonBacktracking);

var publishedDate = fileInfo.CreationTime;

var publishDate = DateRegex.Match(restOfTheLines).Groups[1].Value;

if (!string.IsNullOrWhiteSpace(publishDate))

publishedDate = DateTime.ParseExact(publishDate, "yyyy-MM-ddTHH:mm", CultureInfo.InvariantCulture);

restOfTheLines = DateRegex.Replace(restOfTheLines, "");

pipeline = new MarkdownPipelineBuilder().UseAdvancedExtensions().Use<ImgExtension>().Build();

var processed =

html ? Markdown.ToHtml(restOfTheLines, pipeline) : Markdown.ToPlainText(restOfTheLines, pipeline);

Get the 'slug' This is simply the filename without the extension:

private string GetSlug(string fileName)

{

var slug = System.IO.Path.GetFileNameWithoutExtension(fileName);

return slug.ToLowerInvariant();

}

Return the content Now we have page content we can display for the blog!

public (string title, string slug, DateTime publishDate, string processed, string[] categories, string

restOfTheLines) GetPage(string page, bool html)

{

var fileInfo = new FileInfo(page);

// Ensure the file exists

if (!fileInfo.Exists) throw new FileNotFoundException("The specified file does not exist.", page);

// Read all lines from the file

var lines = File.ReadAllLines(page);

// Get the title from the first line

var title = lines.Length > 0 ? Markdown.ToPlainText(lines[0].Trim()) : string.Empty;

// Concatenate the rest of the lines with newline characters

var restOfTheLines = string.Join(Environment.NewLine, lines.Skip(1));

// Extract categories from the text

var categories = GetCategories(restOfTheLines);

var publishedDate = fileInfo.CreationTime;

var publishDate = DateRegex.Match(restOfTheLines).Groups[1].Value;

if (!string.IsNullOrWhiteSpace(publishDate))

publishedDate = DateTime.ParseExact(publishDate, "yyyy-MM-ddTHH:mm", CultureInfo.InvariantCulture);

// Remove category tags from the text

restOfTheLines = CategoryRegex.Replace(restOfTheLines, "");

restOfTheLines = DateRegex.Replace(restOfTheLines, "");

// Process the rest of the lines as either HTML or plain text

var processed =

html ? Markdown.ToHtml(restOfTheLines, pipeline) : Markdown.ToPlainText(restOfTheLines, pipeline);

// Generate the slug from the page filename

var slug = GetSlug(page);

// Return the parsed and processed content

return (title, slug, publishedDate, processed, categories, restOfTheLines);

}

The code below shows how I generate the list of blog posts, it uses the GetPage(page, false) method to extract the title, categories, published date and the processed content.

public List<PostListModel> GetPosts(string[] pages)

{

List<PostListModel> pageModels = new();

foreach (var page in pages)

{

var pageInfo = GetPage(page, false);

var summary = Markdown.ToPlainText(pageInfo.restOfTheLines).Substring(0, 100) + "...";

pageModels.Add(new PostListModel

{

Categories = pageInfo.categories, Title = pageInfo.title,

Slug = pageInfo.slug, WordCount = WordCount(pageInfo.restOfTheLines),

PublishedDate = pageInfo.publishDate, Summary = summary

});

}

pageModels = pageModels.OrderByDescending(x => x.PublishedDate).ToList();

return pageModels;

}

public List<PostListModel> GetPostsForFiles()

{

var pages = Directory.GetFiles("Markdown", "*.md");

return GetPosts(pages);

}